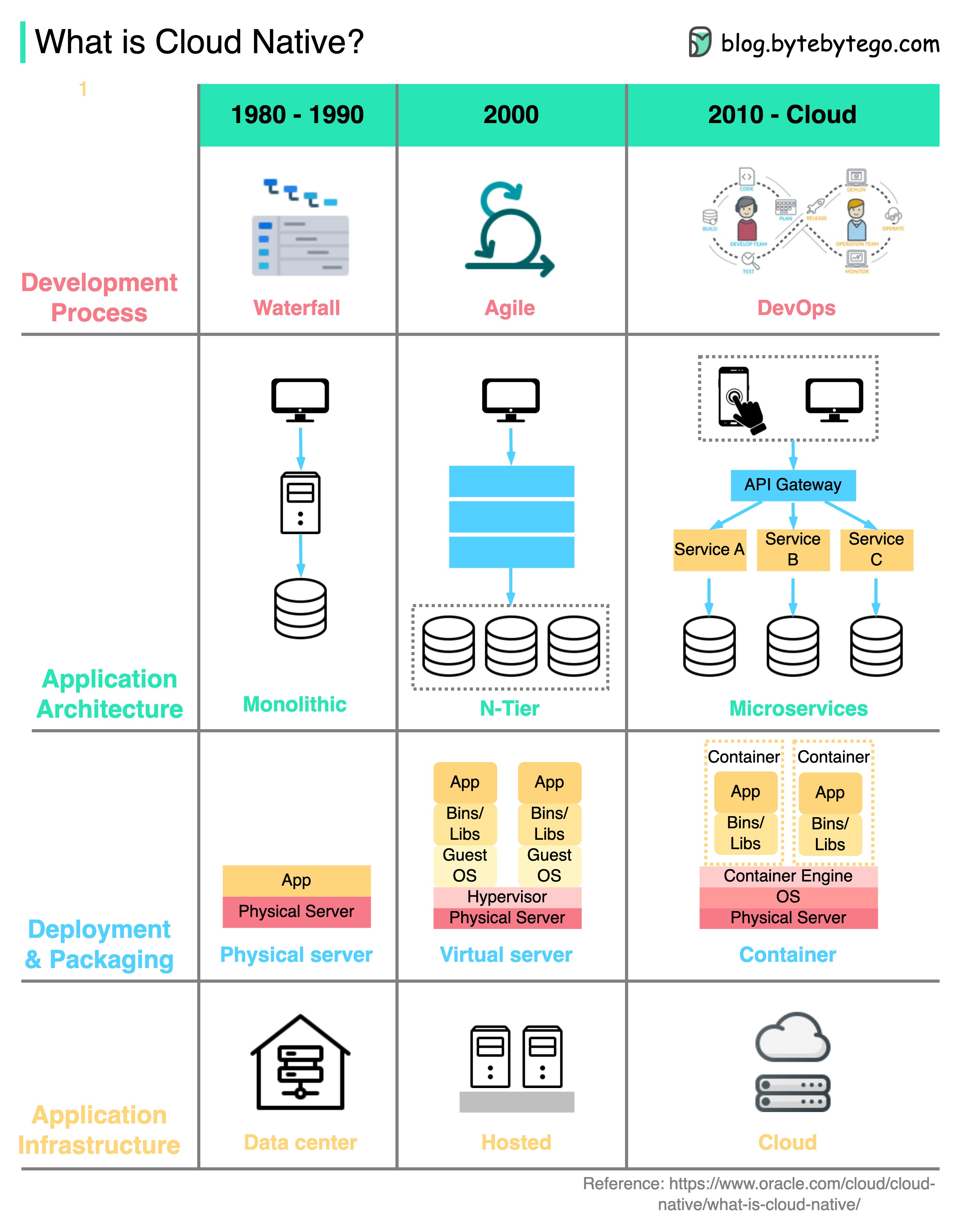

The term “cloud-native” has become ubiquitous in modern software development and IT infrastructure. It signifies a fundamental shift in how applications are designed, built, deployed, and managed, leveraging the inherent advantages of cloud computing. Unlike traditional monolithic applications that are often migrated to the cloud with minimal changes, cloud-native applications are conceived from the ground up with the cloud in mind. This approach promises greater agility, scalability, resilience, and faster innovation cycles, making it a cornerstone of digital transformation for many organizations.

At its core, a cloud-native application is an application that is built and run specifically to take advantage of the cloud computing delivery model. This doesn’t just mean running an application on a cloud provider’s virtual machines. Instead, it involves embracing a set of architectural principles and adopting specific technologies that enable applications to thrive in dynamic and distributed cloud environments. These principles are designed to address the unique challenges and opportunities presented by the cloud, such as elasticity, self-healing, and on-demand resource provisioning.

The journey to cloud-native is not merely a technological upgrade; it’s a cultural and operational evolution. It requires a change in mindset, fostering collaboration between development and operations teams (DevOps), and embracing automation at every stage of the application lifecycle. The goal is to create applications that are not only efficient and cost-effective to run in the cloud but also highly adaptable to changing business needs and market demands.

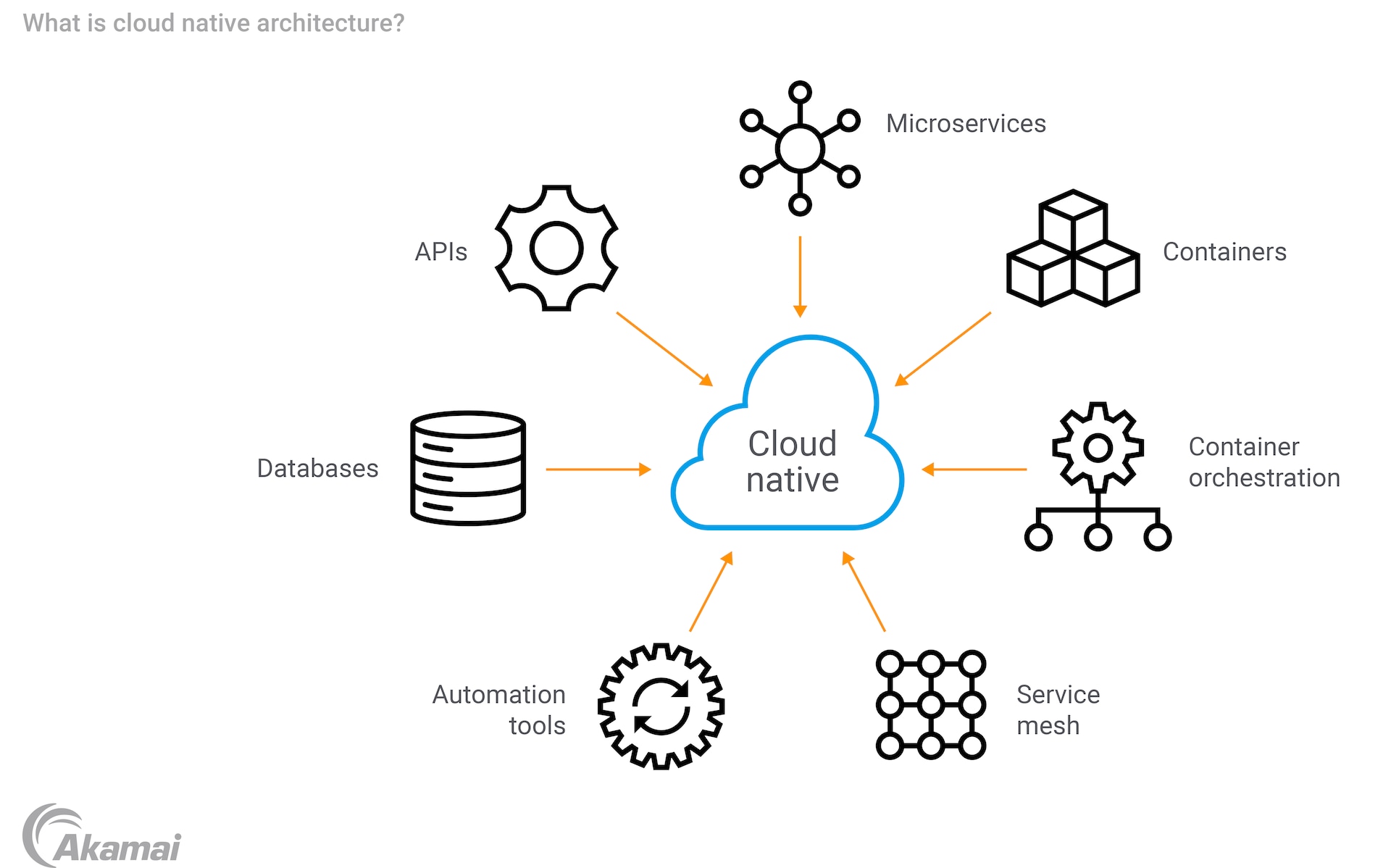

The Pillars of Cloud-Native Architecture

Cloud-native applications are built upon a foundation of specific architectural patterns and technologies that empower them to harness the full potential of the cloud. These pillars work in concert to deliver the agility, scalability, and resilience expected from modern software. Understanding these core components is crucial for comprehending what makes an application truly cloud-native.

Microservices Architecture

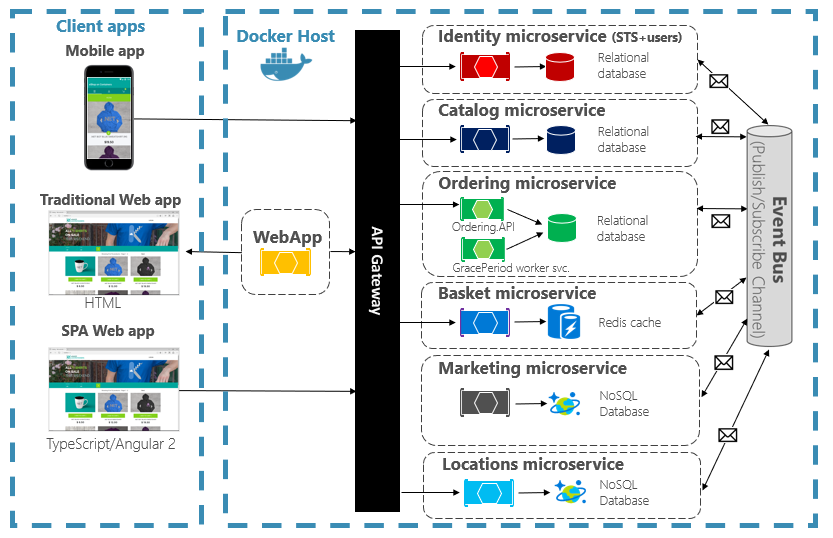

One of the most defining characteristics of cloud-native applications is their adoption of a microservices architecture. Instead of a large, monolithic application, a cloud-native application is broken down into a collection of small, independent services, each responsible for a specific business capability. These services communicate with each other, typically over lightweight protocols like REST APIs or asynchronous messaging queues.
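To make this concrete, here is a minimal sketch of a single microservice. It owns one business capability (looking up prices) and exposes it as a REST endpoint, using only Python's standard library. The `/prices/<sku>` route, the in-memory `PRICES` data, and the port are hypothetical. A production service would more likely use a web framework and its own datastore.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data owned by this one service (a real one would use its own database).
PRICES = {"sku-1": 9.99, "sku-2": 24.50}


class PriceHandler(BaseHTTPRequestHandler):
    """Serves GET /prices/<sku> as JSON."""

    def do_GET(self):
        prefix = "/prices/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku in PRICES:
            status, body = 200, {"sku": sku, "price": PRICES[sku]}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        # Silence the default per-request logging to stderr.
        pass


def make_server(host="0.0.0.0", port=8080):
    # Binding to all interfaces makes the service reachable from outside its container;
    # port 0 asks the OS for any free port (handy in tests).
    return HTTPServer((host, port), PriceHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```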

Independence and Loose Coupling

The independence of microservices is a key benefit. Each service can be developed, deployed, scaled, and updated independently of others. This means a change to one service doesn’t necessitate a redeployment of the entire application. This loose coupling significantly speeds up development cycles and allows teams to specialize in specific services, fostering greater efficiency and expertise. If one microservice fails, it doesn’t necessarily bring down the entire application, contributing to improved resilience.

Technology Heterogeneity

Microservices also allow for technology heterogeneity. Different services can be built using the most appropriate programming language, framework, or database for their specific task. For example, a data-analysis or machine-learning service might be written in Python to take advantage of its scientific libraries, while a high-throughput transactional service could be built with Java. This flexibility allows developers to choose the best tools for the job, rather than being constrained by a single technology stack for the entire application.

Containerization

Containerization is another fundamental technology that underpins cloud-native development. Technologies like Docker allow developers to package an application and its dependencies into a standardized unit called a container. This container provides an isolated environment where the application can run consistently, regardless of the underlying infrastructure.

Consistent Environments

Containers help an application run the same way in development, testing, and production. This largely eliminates the “it works on my machine” problem and reduces deployment friction. The application’s environment, including libraries, binaries, and configuration files, is bundled within the container image, so the same image behaves consistently across different machines and cloud environments.

Portability and Isolation

Containers offer excellent portability. A container image built on a developer’s laptop can be run on any cloud platform or on-premises server that supports containers and the image’s CPU architecture (for example, x86-64 or ARM64). This portability simplifies deployment and migration processes. Furthermore, containers provide a degree of isolation, preventing applications from interfering with each other and enhancing security. Because containers share the host’s kernel, however, this isolation is weaker than that of virtual machines.

Orchestration

While containerization provides the packaging, orchestration is essential for managing a large number of containers effectively in a distributed cloud environment. Container orchestration platforms, most notably Kubernetes, automate the deployment, scaling, and management of containerized applications.

Automated Deployment and Scaling

Orchestrators handle the complex task of deploying containerized applications across a cluster of machines. They can automatically scale applications up or down based on demand, ensuring that the application can handle fluctuating workloads. This dynamic scaling is a hallmark of cloud-native resilience and cost-efficiency.
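Kubernetes’ Horizontal Pod Autoscaler, for example, scales in proportion to how far an observed metric is from its target. The function below is a simplified sketch of that documented proportional rule. The parameter names are illustrative, and the real controller also handles missing metrics, stabilization windows, and multiple metrics.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count from the ratio of observed to target metric.

    Simplified form of the Horizontal Pod Autoscaler rule:
        desired = ceil(current_replicas * current_metric / target_metric)
    """
    ratio = current_metric / target_metric
    # Ignore small deviations so the replica count doesn't flap.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target give ceil(4 × 1.5) = 6 replicas.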

Self-Healing and Resilience

Orchestration platforms also provide self-healing capabilities. If a container crashes or a node becomes unavailable, the orchestrator can automatically restart the container or reschedule it on a healthy node. This built-in resilience ensures that applications remain available even in the face of failures.
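Under the hood, orchestrators such as Kubernetes rely on reconciliation: controllers repeatedly compare the desired state with the observed state and act to close the gap. The toy function below illustrates one pass of that idea. `Pod`, `reconcile`, and the round-robin node placement are hypothetical simplifications; the real Kubernetes scheduler scores nodes rather than cycling through them.

```python
import itertools
from dataclasses import dataclass

# Hypothetical counter used to name replacement pods.
_pod_ids = itertools.count(1)


@dataclass
class Pod:
    name: str
    node: str
    healthy: bool = True


def reconcile(desired, pods, healthy_nodes):
    """One pass of a toy control loop that moves actual state toward desired state.

    Drops pods that have crashed or sit on an unavailable node, then starts
    replacements on healthy nodes until `desired` pods are running.
    """
    running = [p for p in pods if p.healthy and p.node in healthy_nodes]
    if not healthy_nodes:
        return running  # nowhere to schedule; a real controller would retry later
    # Naive round-robin placement across healthy nodes.
    nodes = itertools.cycle(sorted(healthy_nodes))
    while len(running) < desired:
        running.append(Pod(name=f"pod-{next(_pod_ids)}", node=next(nodes)))
    # Too many replicas: keep only as many as desired.
    return running[:desired]
```

A real controller runs this comparison continuously, triggered by watch events, rather than as a one-off function call.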

DevOps and Continuous Delivery

Cloud-native development is intrinsically linked with DevOps practices and a commitment to Continuous Delivery (CD). DevOps is a set of practices that combines software development (Dev) and IT operations (Ops) to shorten the systems development life cycle and provide continuous delivery with high software quality.

Automation and CI/CD Pipelines

Automation is paramount in cloud-native environments. Continuous Integration (CI) automatically builds and tests every code change as it is merged, and Continuous Delivery (CD) extends the pipeline so that every change that passes is packaged and ready to release at any time. This enables developers to release new features and bug fixes rapidly and reliably, fostering agility and responsiveness to business needs. With continuous deployment, the most automated variant, every change that passes the pipeline is deployed to production with minimal human intervention.
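The fail-fast behavior of a pipeline can be sketched in a few lines: stages run in order, and a failing stage stops everything after it, so untested code never reaches the deploy step. This is a hypothetical illustration. Real pipelines are defined in a CI system’s own configuration format, and the stage names used with it here are made up.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order, stopping at the first failure; return a log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            break  # later stages such as deploy never run
    return log
```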

Collaboration and Culture

Beyond tooling, DevOps emphasizes a culture of collaboration and shared responsibility. Developers and operations teams work together throughout the application lifecycle, breaking down traditional silos. This collaborative approach streamlines processes, improves communication, and leads to more robust and well-managed applications.

Key Benefits of Cloud-Native Applications

Adopting a cloud-native approach offers a compelling set of advantages that drive business value and innovation. These benefits are not just theoretical; they translate into tangible improvements in operational efficiency, market responsiveness, and customer satisfaction.

Enhanced Agility and Faster Time-to-Market

The microservices architecture, combined with containerization and CI/CD pipelines, significantly accelerates the pace of development and deployment. Teams can work on different services independently, allowing for parallel development and faster release cycles. This agility enables organizations to respond quickly to market changes, customer feedback, and emerging opportunities, giving them a competitive edge.

Incremental Development and Deployment

Instead of lengthy, infrequent releases of large monolithic applications, cloud-native development allows for frequent, incremental updates. This means that new features and improvements can be delivered to users much faster, and if an issue arises with a new release, it can be rolled back quickly without impacting the entire system.

Reduced Risk of Deployment Failures

With smaller, independent services and automated testing within CI/CD pipelines, the risk of deployment failures is substantially reduced. Each deployment is for a smaller unit of change, making it easier to identify and fix issues. If a deployment does encounter problems, the impact is often localized to a specific service, and rollback procedures are typically faster and more straightforward.
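One common way to automate the rollback decision is a canary release: the new version first receives a small share of traffic, and its error rate is compared with that of the stable version. The function below sketches that comparison. The thresholds (`max_ratio`, `min_requests`, and the 0.1% floor) are hypothetical values, not recommendations.

```python
def canary_verdict(baseline_errors, baseline_requests,
                   canary_errors, canary_requests,
                   max_ratio=1.5, min_requests=100):
    """Return "wait", "promote", or "rollback" for a canary release.

    The canary is rolled back if its error rate exceeds the stable
    baseline's by more than `max_ratio` (hypothetical threshold).
    """
    if canary_requests < min_requests:
        return "wait"  # not enough traffic yet to judge
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    # A small floor keeps a zero-error baseline from making any single error fatal.
    allowed = max(baseline_rate * max_ratio, 0.001)
    return "rollback" if canary_rate > allowed else "promote"
```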

Improved Scalability and Elasticity

Cloud-native applications are designed to scale dynamically and elastically. With autoscaling configured, they can adjust their resource consumption based on real-time demand, balancing performance against cost. This elasticity is a core advantage of the cloud, allowing applications to absorb sudden spikes in traffic with little or no performance degradation and to scale down to save resources during periods of low activity.

Handling Variable Workloads

Whether it’s a retail application experiencing a surge in traffic during a holiday sale or a streaming service during a popular event, cloud-native applications can scale out to meet the demand, provided their services are designed to run as multiple interchangeable instances. Conversely, they can scale down when demand subsides, preventing over-provisioning of resources and reducing operational costs.

Cost Optimization

By scaling resources up and down as demand changes, organizations can achieve significant cost savings. With pay-as-you-go pricing, they pay for the capacity they actually use rather than maintaining over-provisioned infrastructure sized for peak loads around the clock.
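A small worked example shows where the savings come from. The demand profile and per-instance capacity below are hypothetical; the point is the comparison between provisioning for the peak all the time and provisioning hour by hour.

```python
import math


def instance_hours(hourly_demand, capacity_per_instance, elastic):
    """Instance-hours needed to serve a demand profile.

    Fixed provisioning runs enough instances for the peak hour in every hour;
    elastic provisioning runs only what each hour needs.
    """
    needed = [math.ceil(d / capacity_per_instance) for d in hourly_demand]
    if elastic:
        return sum(needed)
    return max(needed) * len(needed)


# Hypothetical demand in requests/s over six hours; one instance serves 100 requests/s.
demand = [100, 100, 400, 1000, 400, 100]
print(instance_hours(demand, 100, elastic=False))  # 60
print(instance_hours(demand, 100, elastic=True))   # 21
```

With these made-up numbers, elastic provisioning needs 21 instance-hours versus 60 for peak provisioning. Real savings also depend on pricing models, how quickly scaling reacts, and any minimum capacity kept warm.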

Increased Resilience and Availability

Cloud-native architectures are inherently designed for resilience. Through microservices, containerization, and orchestration, applications can withstand failures and continue to operate with minimal disruption.

Fault Isolation and Graceful Degradation

In a microservices architecture, the failure of a single service doesn’t necessarily mean the entire application goes down. Orchestration platforms can detect failing services and automatically restart them or redirect traffic to healthy instances. This fault isolation contributes to a more robust and available system. In cases where a critical service is unavailable, applications can be designed to gracefully degrade functionality, offering a reduced but still usable experience to users.
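Graceful degradation is often implemented with a circuit breaker: after repeated failures, calls to the unhealthy dependency are skipped for a while and a fallback response is served instead. The class below is a minimal, single-threaded sketch. The thresholds and the fallback are assumptions, and production systems usually rely on a resilience library or a service mesh for this.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: fail fast and fall back after repeated errors.

    closed -> open after `max_failures` consecutive failures;
    open -> half-open (one trial call) after `reset_after` seconds.
    """

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: don't touch the failing dependency
            # Otherwise half-open: let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        # Success closes the circuit and resets the counter.
        self.failures = 0
        self.opened_at = None
        return result
```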

Automatic Recovery Mechanisms

Container orchestrators like Kubernetes implement sophisticated self-healing mechanisms. They continuously monitor the health of containers and pods, automatically restarting or rescheduling them if they become unresponsive. This automated recovery process ensures that applications remain operational without manual intervention, even in the event of hardware failures or network issues.
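Kubernetes liveness probes are one such mechanism: the kubelet checks a container periodically and restarts it after a configured number of consecutive failures (the probe’s `failureThreshold`). The class below is a hypothetical model of that counting logic only, not kubelet code.

```python
class LivenessTracker:
    """Counts consecutive probe failures and signals when to restart.

    One success resets the count; `failure_threshold` consecutive
    failures trigger a restart.
    """

    def __init__(self, failure_threshold=3):
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0
        self.restarts = 0

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result; return True if a restart is triggered."""
        if probe_ok:
            self.consecutive_failures = 0
            return False
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.restarts += 1
            self.consecutive_failures = 0  # the restarted container starts fresh
            return True
        return False
```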

The Technology Stack Behind Cloud-Native

Building and running cloud-native applications involves a rich ecosystem of technologies and tools. While the specific choices can vary, certain categories of technology are fundamental to the cloud-native paradigm.

Container Runtimes and Orchestrators

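At the heart of cloud-native infrastructure lies containerization. Docker popularized containers and remains the most widely used tool for building images and running containers on developer machines. In production clusters, containers are usually run by lower-level runtimes such as containerd or CRI-O; because these follow the Open Container Initiative (OCI) standards, images built with Docker run on them unchanged. However, managing hundreds or thousands of containers across multiple machines requires an orchestration layer.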

Kubernetes as the De Facto Standard

Kubernetes has emerged as the dominant container orchestration platform. It automates the deployment, scaling, and management of containerized applications. Kubernetes provides a robust framework for managing the lifecycle of containers, including scheduling, load balancing, service discovery, and self-healing. Its extensibility and vast community support have made it the go-to choice for organizations adopting cloud-native strategies.

Other Orchestration Options

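While Kubernetes is dominant, other orchestration solutions exist, such as Docker Swarm (now less widely used) and Amazon Elastic Container Service (ECS), AWS’s own proprietary orchestrator. Cloud providers also offer managed Kubernetes services, such as Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE), which run Kubernetes itself while the provider operates the control plane.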

Service Mesh and API Gateways

As applications grow in complexity with numerous microservices, managing inter-service communication, security, and observability becomes challenging. Service meshes and API gateways address these challenges.

Enabling Inter-Service Communication

A service mesh, like Istio or Linkerd, provides a dedicated infrastructure layer for managing service-to-service communication. It handles tasks such as traffic management, load balancing, authentication, authorization, and observability between microservices. By abstracting these concerns, developers can focus on business logic rather than infrastructure plumbing.
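One traffic-management feature a mesh typically provides is automatic retries. In a mesh these are configured declaratively on the sidecar proxies rather than coded into each service; the Python function below only illustrates the behavior (capped exponential backoff with jitter) under assumed defaults. Retries are only safe for idempotent requests.

```python
import random
import time


def call_with_retries(request, attempts=3, base_delay=0.1, max_delay=2.0,
                      sleep=time.sleep, rng=random.random):
    """Retry a failing call with capped exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return request()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # The delay cap grows 0.1s, 0.2s, 0.4s, ... up to max_delay; random jitter
            # spreads retries out so many clients don't retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * rng())
```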

Centralized Entry Point and Security

API Gateways act as a single entry point for all client requests to the backend services. They handle tasks like request routing, authentication, rate limiting, and response transformation. API Gateways are crucial for managing external access to cloud-native applications, ensuring security and providing a consistent API for clients.
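Rate limiting at a gateway is commonly implemented with a token bucket. The sketch below assumes one bucket per client and an injectable clock for testing; the capacity and refill rate are illustrative.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter, e.g. one instance per API client.

    Allows bursts of up to `capacity` requests and a sustained rate of
    `refill_rate` requests per second.
    """

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Add tokens for the time elapsed since the last check, up to capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests
```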

Observability Tools

In a distributed system of microservices, understanding what’s happening within the application is critical for troubleshooting and performance optimization. Observability tools provide deep insights into application behavior.

Monitoring, Logging, and Tracing

Observability encompasses three key pillars:

- Monitoring: Collecting metrics about application performance and resource usage. Tools like Prometheus and Grafana are popular for this.

- Logging: Aggregating and analyzing logs from all services to understand events and errors. Elasticsearch, Fluentd, and Kibana (the EFK stack) or Loki are common choices.

- Tracing: Tracking requests as they traverse multiple microservices to identify bottlenecks and diagnose performance issues. Jaeger and Zipkin are widely used tracing systems.
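Tracing works because each service forwards a trace context with its outgoing requests. A widely used format is the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`), which OpenTelemetry uses by default. The sketch below generates and propagates such headers; it accepts only version `00` and is not a complete implementation of the specification.

```python
import re
import secrets

# version-traceid-parentid-flags; this sketch accepts only version 00.
TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


def new_traceparent(sampled=True):
    """Start a new trace, e.g. at the API gateway."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex characters
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming):
    """Header for a downstream call: keep the trace ID, use this service's new span ID."""
    match = TRACEPARENT.fullmatch(incoming)
    # All-zero trace or parent IDs are invalid per the specification.
    if match is None or set(match.group(1)) == {"0"} or set(match.group(2)) == {"0"}:
        return new_traceparent()  # unusable header: start a new trace
    trace_id, _parent_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"
```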

Cloud Platforms and Infrastructure as Code (IaC)

Cloud-native applications are typically deployed on cloud platforms (public, private, or hybrid). The way this infrastructure is managed is also crucial.

Leveraging Cloud Provider Services

Public cloud providers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer a wide array of managed services that are essential for cloud-native development, including managed Kubernetes, serverless functions, databases, and messaging queues.

Infrastructure as Code (IaC)

Tools like Terraform and Ansible are used to define and manage infrastructure through code. This approach ensures that infrastructure is provisioned in a consistent, repeatable, and version-controlled manner, which is essential for the automated deployment and management of cloud-native applications.
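Declarative IaC tools work by comparing the desired configuration with the real infrastructure and computing a set of changes; Terraform calls this step a plan. The function below is a toy version of that diff over plain dictionaries. The resource names are hypothetical, and it ignores dependencies between resources.

```python
def plan(desired, actual):
    """Changes needed to move `actual` infrastructure to `desired`.

    Both arguments map a resource name to its configuration dict.
    """
    changes = []
    for name in sorted(desired.keys() - actual.keys()):
        changes.append(("create", name))
    for name in sorted(desired.keys() & actual.keys()):
        if desired[name] != actual[name]:
            changes.append(("update", name))
    for name in sorted(actual.keys() - desired.keys()):
        changes.append(("delete", name))
    return changes
```

Because the plan is derived from the difference, applying it and planning again yields no changes, which is what makes repeated runs safe.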

The Future of Cloud-Native and Its Impact

The cloud-native paradigm is not a static destination but an evolving journey. As technologies mature and new approaches emerge, the landscape of cloud-native development will continue to transform, impacting how software is built and delivered across industries.

Serverless Computing and Edge Computing

Serverless computing, where cloud providers manage the underlying infrastructure and developers only focus on writing code (e.g., AWS Lambda, Azure Functions), is a natural extension of the cloud-native philosophy. It further abstracts away infrastructure concerns, allowing for even greater agility and cost efficiency for event-driven workloads.
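A serverless function is just a handler that the platform invokes once per event. The sketch below uses the `(event, context)` handler signature of AWS Lambda’s Python runtime and returns the `statusCode`/`body` shape that Amazon API Gateway’s proxy integration expects; the `name` field in the event is hypothetical.

```python
import json


def handler(event, context):
    """Invoked once per event; the platform provisions and scales the runtime.

    `event` is a dict whose shape depends on the trigger (hypothetical here);
    `context` carries runtime metadata and is unused in this sketch.
    """
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```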

Edge computing, which brings computation and data storage closer to the source of data generation (e.g., IoT devices), is also increasingly being integrated with cloud-native principles. This allows for faster processing of data and reduced latency for applications that require real-time responsiveness.

Growing Importance of Security and Governance

As cloud-native applications become more complex and distributed, ensuring security and robust governance becomes paramount. The focus is shifting towards “shift-left” security, integrating security considerations earlier in the development lifecycle, and implementing automated security policies across the entire cloud-native ecosystem.

DevSecOps and Policy Enforcement

DevSecOps integrates security practices into the DevOps workflow, fostering collaboration between development, security, and operations teams. This ensures that security is not an afterthought but a core component of the entire application lifecycle. Automated policy enforcement, leveraging tools like Open Policy Agent (OPA), ensures that deployments adhere to organizational security and compliance requirements.
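In OPA, policies are written in its Rego language and are often enforced at admission time in Kubernetes, for example through OPA Gatekeeper. To show the idea without Rego, the Python function below checks a Deployment manifest (as a dict) against three example rules. The rules themselves are hypothetical, not a recommended baseline.

```python
def policy_violations(deployment):
    """Check a Kubernetes Deployment (parsed into a dict) against example rules.

    Hypothetical organizational rules:
      * images must be pinned to a tag (or digest) other than ':latest'
      * containers must not run privileged
      * containers must declare resource limits
    """
    violations = []
    for c in deployment["spec"]["template"]["spec"]["containers"]:
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        # Look for a tag only in the last path segment, so a registry port isn't mistaken for one.
        if ":" not in image.rsplit("/", 1)[-1] or image.endswith(":latest"):
            violations.append(f"{name}: image must use a pinned, non-latest tag")
        if c.get("securityContext", {}).get("privileged", False):
            violations.append(f"{name}: privileged containers are not allowed")
        if "limits" not in c.get("resources", {}):
            violations.append(f"{name}: resource limits are required")
    return violations
```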

AI and Machine Learning Integration

The ability of cloud-native applications to be agile and scalable makes them ideal platforms for integrating AI and ML capabilities. Complex ML models can be deployed and managed as microservices, and their outputs can be consumed by various applications. The continuous delivery nature of cloud-native development also facilitates the rapid iteration and deployment of ML models.

Data-Intensive Workloads

Cloud-native architectures are well-suited for handling the data-intensive workloads that are characteristic of AI and ML. They can scale to process vast amounts of data for training models and can serve predictions at scale to end-users.

In conclusion, cloud-native applications represent a fundamental shift in how software is conceived, built, and operated. By embracing microservices, containerization, orchestration, and DevOps principles, organizations can unlock unprecedented levels of agility, scalability, and resilience, positioning themselves for success in the dynamic digital landscape of today and tomorrow.